As sensor technology advances, existing anti-spoofing systems can be vulnerable to emerging high-quality spoof mediums. One way to make these systems robust to such attacks is to collect new high-quality databases. In response to this need, we collected a new face anti-spoofing database named Spoof in the Wild (SiW).

Database stats

SiW provides live and spoof videos from 165 subjects. For each subject, we have 8 live and up to 20 spoof videos, for a total of 4,478 videos. All videos are recorded at 30 fps, are about 15 seconds long, and have 1080p HD resolution. The live videos are collected in four sessions with variations in distance, pose, illumination, and expression. The spoof videos are collected with several attack types, such as printed paper and replay. More details are in Section 4.

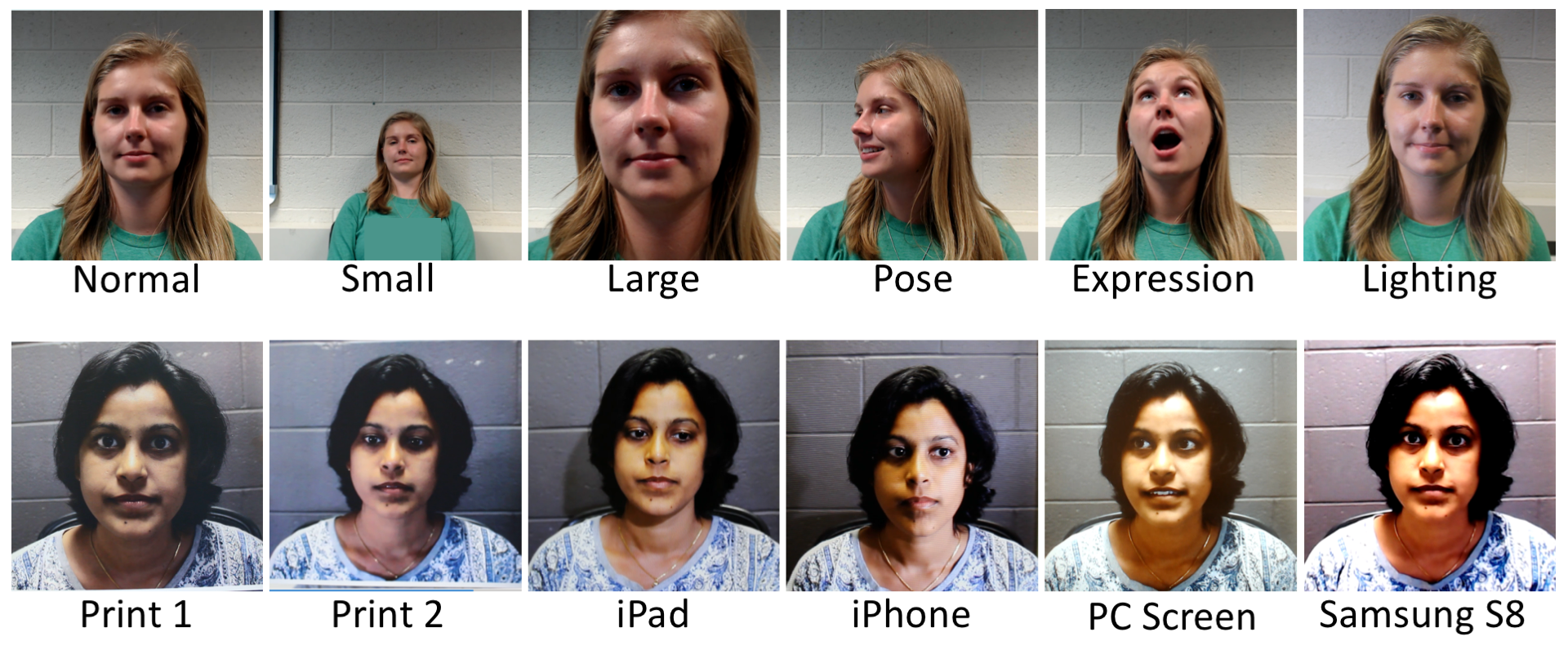

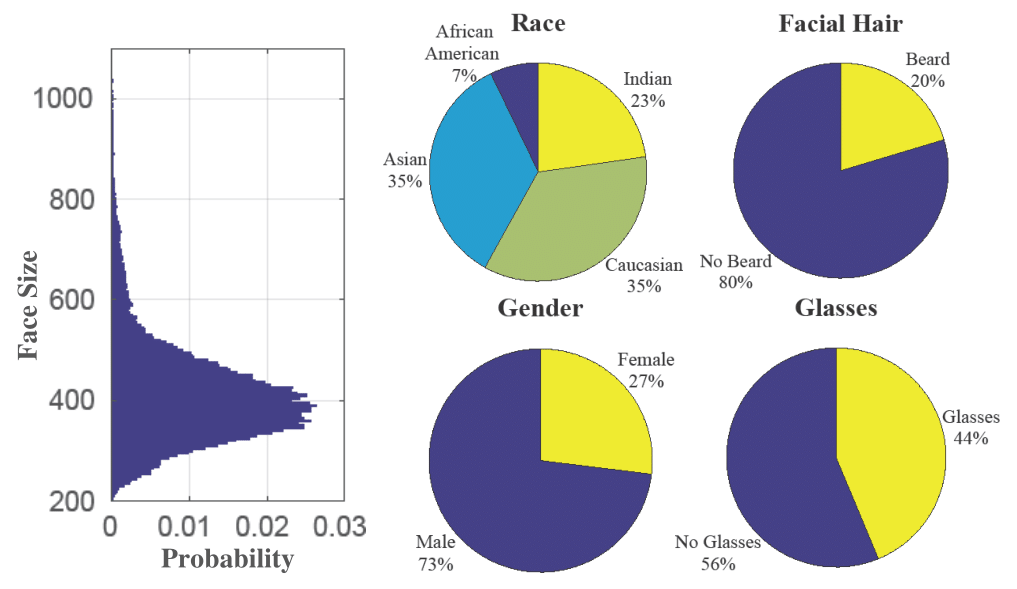

Figure 1: Example live (top left) and spoof (bottom left) videos in SiW. The right figure shows the statistics of the subjects in the SiW database.

Naming

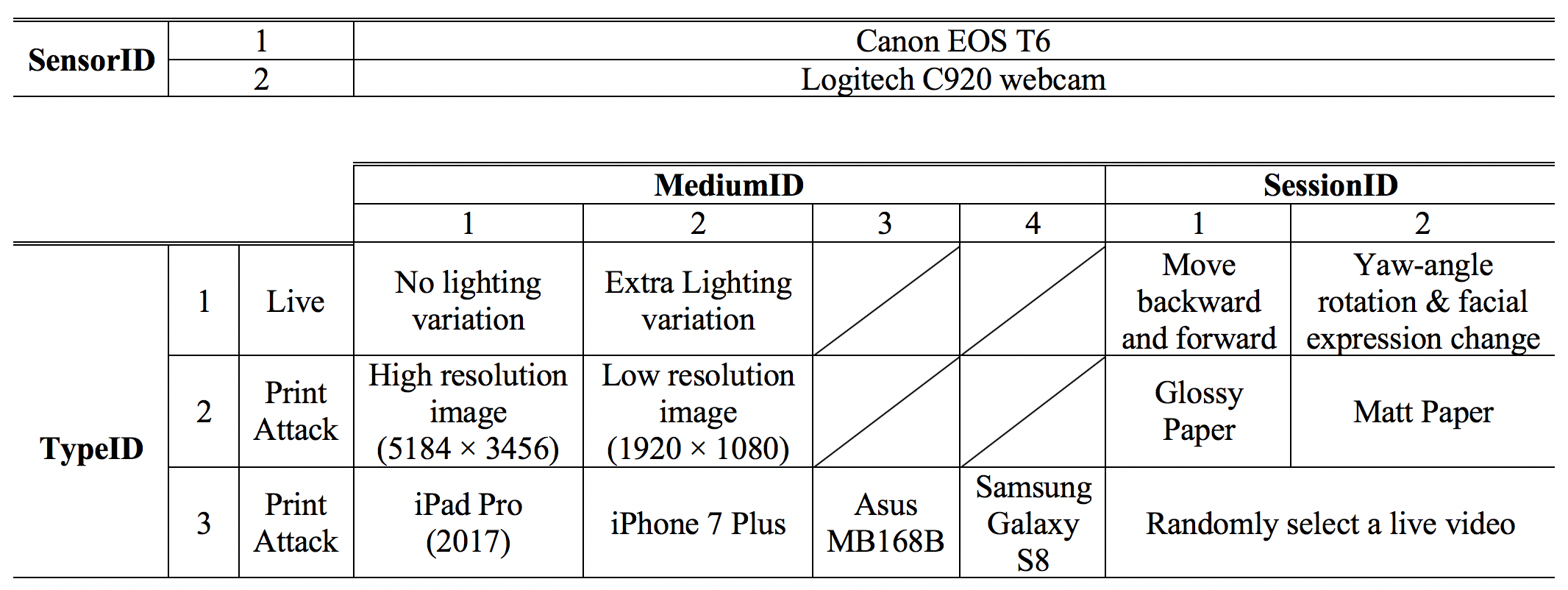

All video files are named SubjectID_SensorID_TypeID_MediumID_SessionID.mov (or *.mp4). SubjectID ranges from 001 to 165. SensorID represents the capture device. TypeID represents the spoof type of the video. MediumID and SessionID record additional details of the video, as shown in Figure 2.
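The naming scheme can be unpacked programmatically. The following is a minimal sketch, not an official tool: the function name `parse_siw_name` and the `SiWName` record are hypothetical. Because the pattern above is written with underscores while the example in the Figure 2 caption uses hyphens, the sketch assumes either separator may appear.

```python
import re
from typing import NamedTuple


class SiWName(NamedTuple):
    """Fields encoded in a SiW file name (hypothetical helper type)."""
    subject_id: int
    sensor_id: int
    type_id: int
    medium_id: int
    session_id: int
    extension: str


# Assumption: fields are separated by "_" or "-", and SubjectID has three digits.
_NAME_RE = re.compile(
    r"^(\d{3})[-_](\d+)[-_](\d+)[-_](\d+)[-_](\d+)\.(mov|mp4|face)$"
)


def parse_siw_name(filename: str) -> SiWName:
    """Split a SiW file name into its five IDs and its extension."""
    match = _NAME_RE.match(filename)
    if match is None:
        raise ValueError(f"not a SiW-style file name: {filename!r}")
    *ids, extension = match.groups()
    subject, sensor, type_id, medium, session = (int(v) for v in ids)
    # SubjectID ranges from 001 to 165 according to the description.
    if not 1 <= subject <= 165:
        raise ValueError(f"SubjectID out of range: {subject}")
    return SiWName(subject, sensor, type_id, medium, session, extension)
```

For example, `parse_siw_name("001-1-1-1-1.mov").type_id` gives the TypeID of that video as an integer; the meaning of each ID value is defined in Figure 2.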

We also provide a face bounding box file with the same name as the corresponding video (i.e., SubjectID_SensorID_TypeID_MediumID_SessionID.face). Each *.face file contains an n-by-4 matrix, where n is the number of frames. Each row records the (x,y) locations of the upper left corner and the bottom right corner of the bounding box in that frame, such as [785 425 1070 710]. A row of [0,0,0,0] means no face was detected.
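Reading a *.face file might look like the sketch below. The exact on-disk layout is not specified here, so this assumes plain text with one bounding box per frame on each line, written as four integers separated by whitespace or commas; `parse_face_text` is a hypothetical helper name.

```python
from typing import List, Optional, Tuple

# (x1, y1, x2, y2): upper left corner, then bottom right corner.
Box = Tuple[int, int, int, int]


def parse_face_text(text: str) -> List[Optional[Box]]:
    """Parse the contents of a *.face file into one entry per frame.

    Assumption: each line holds four integers for one frame.
    A box of all zeros is returned as None (no face detected).
    """
    boxes: List[Optional[Box]] = []
    for line_no, line in enumerate(text.strip().splitlines(), start=1):
        fields = line.replace(",", " ").split()
        if len(fields) != 4:
            raise ValueError(f"line {line_no}: expected 4 values, got {len(fields)}")
        x1, y1, x2, y2 = (int(v) for v in fields)
        box = (x1, y1, x2, y2)
        boxes.append(None if box == (0, 0, 0, 0) else box)
    return boxes
```

The list index is the frame index, so `boxes[i]` lines up with frame `i` of the video, and frames without a detection can be skipped by checking for `None`.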

Figure 2: Naming of the SiW database. For example, 001-1-1-1-1.mov represents the live video of subject 001 captured with a Canon EOS T6, in which the subject moves backward and forward during recording with no extra lighting variation.

Evaluation protocols

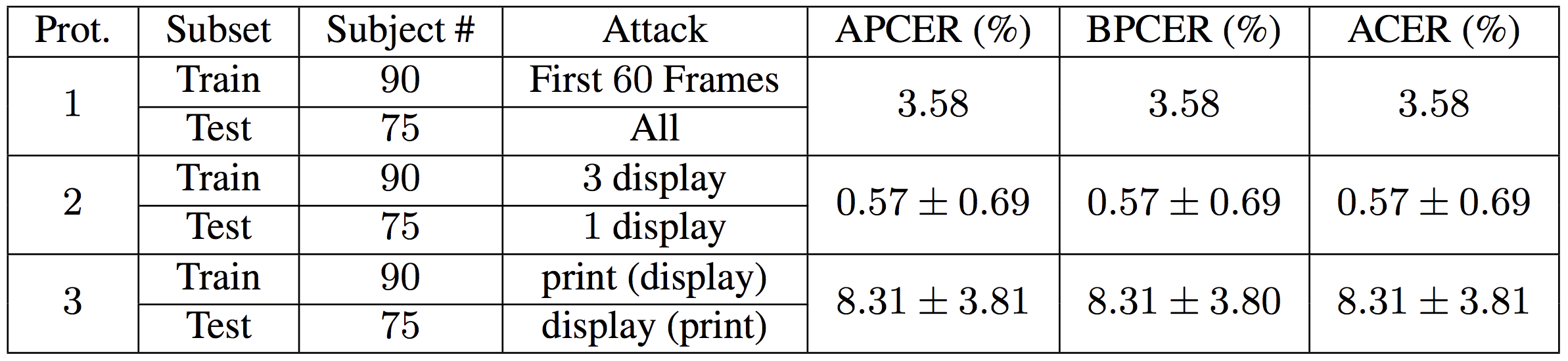

To set a baseline for future study on SiW, we define three protocols for SiW:

The first protocol is designed to evaluate the generalization of face presentation attack detection (PAD) methods under different face poses and expressions. For training, you may use only the first 60 frames of each training video, which are mainly frontal-view faces. Testing uses all testing videos.

The second protocol evaluates generalization across mediums of the same spoof type, which is the replay attack in this protocol. We adopt a leave-one-out strategy: we train on three replay mediums and test on the fourth. Each time we leave a different medium out, and we report the mean and standard deviation of the four scores.
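The leave-one-out loop of the second protocol can be sketched as follows. This is an illustrative outline under stated assumptions, not the baseline code: `leave_one_medium_out` and the `evaluate` callback are hypothetical names, the medium identifiers are placeholders, and the population standard deviation is used because the text does not say which variant is reported.

```python
from statistics import mean, pstdev
from typing import Callable, Dict, List, Sequence, Tuple


def leave_one_medium_out(
    mediums: Sequence[int],
    evaluate: Callable[[List[int], int], float],
) -> Tuple[Dict[int, float], float, float]:
    """Train on all mediums but one, test on the held-out one, and repeat.

    `evaluate(train_mediums, test_medium)` is a user-supplied function that
    trains a model and returns its score on the held-out medium.
    Returns the per-medium scores, their mean, and their standard deviation.
    """
    if len(mediums) < 2:
        raise ValueError("need at least two mediums")
    scores: Dict[int, float] = {}
    for held_out in mediums:
        train = [m for m in mediums if m != held_out]
        scores[held_out] = evaluate(train, held_out)
    values = list(scores.values())
    # Assumption: population standard deviation over the folds.
    return scores, mean(values), pstdev(values)
```

With four replay mediums, `evaluate` is called four times, each time with three training mediums and one test medium.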

The third protocol is designed to evaluate performance on unknown presentation attacks (PAs). Based on this database, we design a cross-PA test from print attack to replay attack and vice versa.

Figure 3 presents the training set of each protocol and the corresponding baseline performance.

Figure 3: The baseline performance on the three protocols of SiW.

Download

1. The SiW database is available under a license from Michigan State University for research purposes. Sign the Dataset Release Agreement (DRA).

2. Submit the request and upload your signed DRA at Online Application.

3. If you are unable to access Google servers in Step 2, please send an email to siwdatabase@gmail.com with the following information:

- Title: SiW Application

- CC: Your advisor's email

- Content Line 1: Your name, email, affiliation

- Content Line 2: Your advisor's name, email, webpage

- Attachment: Signed DRA (files in the wrong format may take longer to process!)

4. You will receive the download username/password and instructions once your use of the database is approved. You can download the SiW database within 30 days of approval.

Acknowledgements

If you use the SiW database, please cite the following paper:

- Learning Deep Models for Face Anti-Spoofing: Binary or Auxiliary Supervision

Yaojie Liu*, Amin Jourabloo*, Xiaoming Liu

In Proceedings of IEEE Computer Vision and Pattern Recognition (CVPR 2018), Salt Lake City, UT, Jun. 2018
